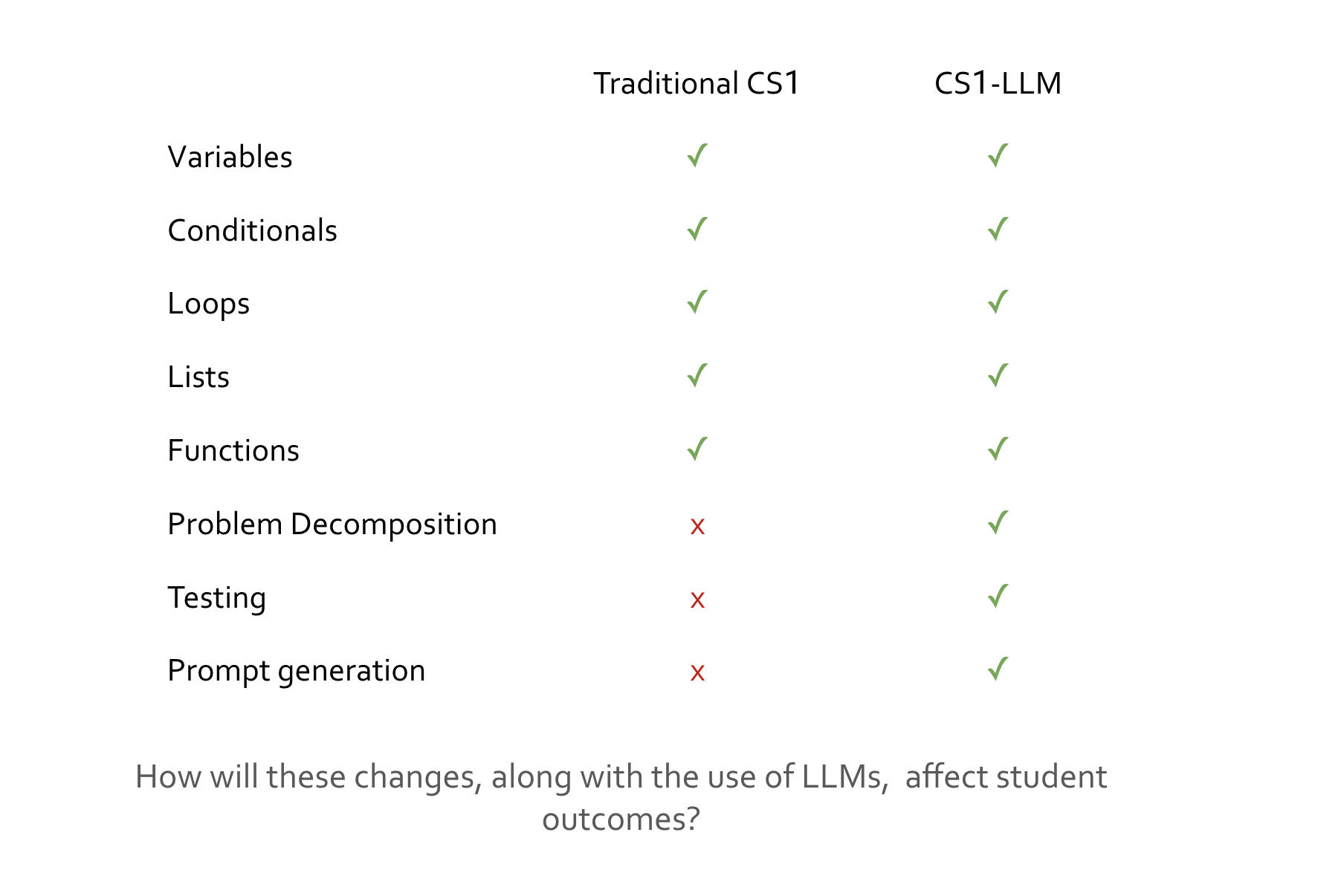

Large language models (LLMs) are increasingly used in computer science in general and in classrooms in particular. Plugins such as GitHub Copilot leverage LLMs to provide code suggestions in real time, and it is unclear how this affects students' learning of fundamental computing principles. Our objective is to compare student outcomes in an LLM-augmented introductory computer science class (CS1-LLM) with those in a traditional introductory computer science class (CS1), specifically examining differential outcomes across demographic backgrounds. We are developing a novel introductory CS course led by Prof. Leo Porter, UCSD, and Prof. Dan Zingaro, University of Toronto. A key component of CS1-LLM is its instruction on the use of LLMs (specifically GitHub Copilot) to help write effective code, including guidance on the basics of prompt engineering. A second key property of CS1-LLM is its accelerated pace. Our course assumes that students will use Copilot to solve typical CS1 problems quickly. It therefore covers basic programming topics that typically span an entire course (e.g. variables, conditionals, loops, and functions) in the first few weeks. The freed course time will be spent on skills such as problem decomposition, testing, and debugging that are not traditionally taught in CS1 courses. Students in CS1 have formerly needed to acquire these skills without explicit instruction; such student-discovered knowledge is referred to as “hidden curriculum”, and it can be a barrier to students from underrepresented backgrounds.

We aim to answer the following questions:

RQ1: How do student outcomes in CS1-LLM compare with those in traditional CS1?

RQ2: How do the differences in outcomes across demographics in CS1-LLM compare with those in traditional CS1?

We are collecting data from surveys and assessments (e.g. exams and homework). We will make comparisons both between courses (CS1 from Fall 2022 vs. the current Fall 2023 CS1-LLM) and within CS1-LLM. From the Fall 2022 offering, we have data about student demographics, perceptions (through surveys), grades, and assessment performance. We will collect analogous data from the current CS1-LLM offering. For the programming skills assessed in both courses (e.g. use of conditionals, loops, and functions), we will analyze performance differences. We will also analyze how outcomes and attitudes for students from underrepresented backgrounds differ between CS1 and CS1-LLM, as compared with those of students from highly represented backgrounds. Within the course, we will examine survey and assessment performance data to conduct similar analyses of how students of different backgrounds fare in CS1-LLM. We will compare these results with the within-course analysis of the 2022 offering of CS1.
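One way to picture the between-course comparison in RQ2 is as a difference in outcome gaps. The following Python sketch is only an illustration under stated assumptions: the record layout, the group labels, the function names `group_means` and `outcome_gap`, and every score value are hypothetical placeholders, not study data or results, and the actual analysis may use a different statistical method.

```python
from statistics import mean

# Hypothetical records: (course, group, score).
# All values are made-up placeholders for illustration, not study data.
records = [
    ("CS1", "underrepresented", 70.0),
    ("CS1", "underrepresented", 74.0),
    ("CS1", "highly_represented", 80.0),
    ("CS1", "highly_represented", 84.0),
    ("CS1-LLM", "underrepresented", 78.0),
    ("CS1-LLM", "underrepresented", 82.0),
    ("CS1-LLM", "highly_represented", 83.0),
    ("CS1-LLM", "highly_represented", 85.0),
]


def group_means(rows):
    """Return the mean score for each (course, group) pair."""
    buckets = {}
    for course, group, score in rows:
        buckets.setdefault((course, group), []).append(score)
    return {key: mean(values) for key, values in buckets.items()}


def outcome_gap(means, course):
    """Mean score of the highly represented group minus the underrepresented group."""
    return means[(course, "highly_represented")] - means[(course, "underrepresented")]


means = group_means(records)
gap_cs1 = outcome_gap(means, "CS1")       # within-course gap, traditional CS1
gap_llm = outcome_gap(means, "CS1-LLM")   # within-course gap, CS1-LLM
gap_change = gap_llm - gap_cs1            # negative means the gap is smaller in CS1-LLM

print(f"CS1 gap: {gap_cs1}, CS1-LLM gap: {gap_llm}, change: {gap_change}")
```

In this sketch, each within-course gap corresponds to the within-course analysis described above, and the change between the two gaps corresponds to the between-course comparison. A real analysis would also need to account for sample sizes and variance before drawing any conclusion.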